I am the author of iOS 17 Fundamentals, Building iOS User Interfaces with SwiftUI, and eight other courses on Pluralsight.


An (Almost) TDD Workflow in Swift

There are times when writing tests before any production code exists feels paralyzing. Even with good requirements documentation, I often find myself asking, “How am I supposed to write a test to verify x about something that doesn’t exist in actual code yet?” It can be crippling.

The following workflow has helped me grow my test-first development skills. When I find myself staring at the screen, paralyzed because I’m “not supposed to write actual production code until the test is written,” I turn to this workflow to break through and get productive. With practice and experience, I need this strategy less and less, but I’ve found this (almost) TDD workflow to be a helpful gateway into full test-first development.

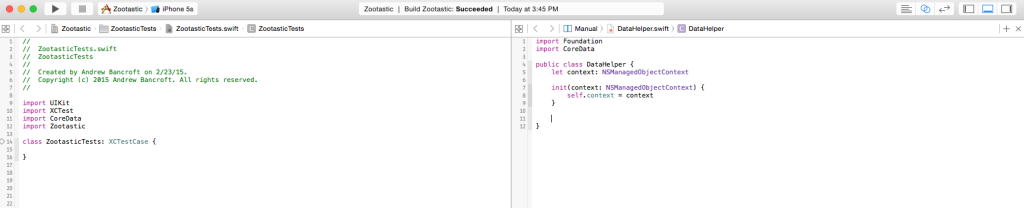

Set up a side-by-side view: Test on left | Code on right

My starting place is to always have a test file open on the left, and the actual production code file that I want to write tests for on the right. This does a couple of things for me:

- It helps me avoid a lot of switching back and forth between tests and production code.

- It helps me keep tests at the forefront of my mind. Without seeing them in front of me, I could more easily forget about them. Having the IDE split this way keeps me conscious of the need to prioritize testing.

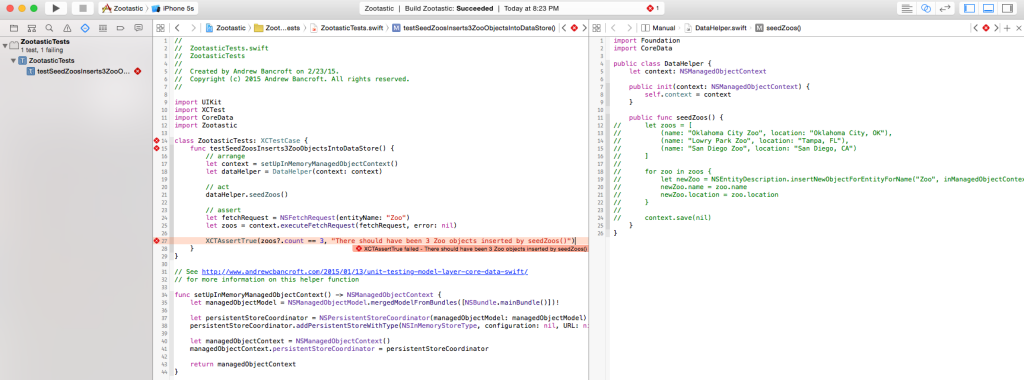

I recently wrote about a technique to seed a Core Data database, and with that post I included a project called “Zootastic”, a contrived app that modeled the storage and display of Zoos (along with Animals and their Classifications). I created a class called DataHelper, which had several seed() methods. To have a concrete example before us, suppose that I wanted to test DataHelper. My screen might look something like this, with my tests on the left and my DataHelper class on the right:

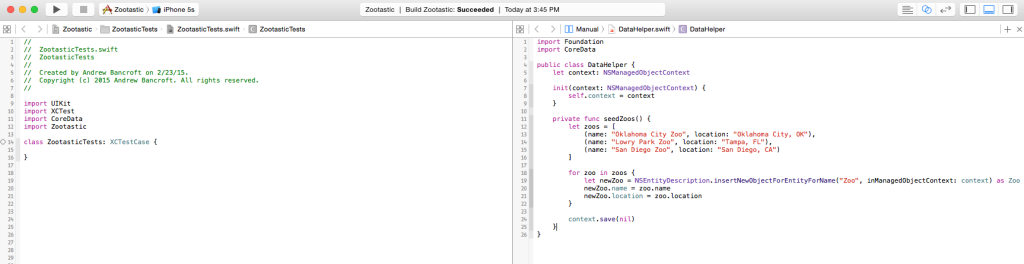

Write the actual production code

What I want is to insert 3 Zoo objects into the Core Data data store. But without the actual code before me, it’s hard to imagine what the test(s) for that code might look like.

When I get stuck in this way, I go ahead and write the actual production code.

One important thing to remember is that you don’t want to write a ton of code in this step: just enough to spark your brain into figuring out what kinds of tests you can write. Write small increments of code. The more you write, the harder it will be to ensure you’ve covered the code and the various edge cases that may exist. Your goal is not to write the app. Your goal is to write a function, or part of a function, just enough to get you going with tests.
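To make “small increment” concrete, here is a minimal sketch of what a first slice of seedZoos() might look like. It is not the Zootastic code: to keep it runnable as a plain Swift script outside Xcode, a hypothetical InMemoryStore class stands in for the Core Data managed object context, and the zoo names are placeholders.

```swift
// Hypothetical stand-in for a Core Data managed object context.
// It only records the names of inserted zoos so the sketch runs without Core Data.
final class InMemoryStore {
    private(set) var zooNames: [String] = []

    func insertZoo(named name: String) {
        zooNames.append(name)
    }

    func countZoos() -> Int {
        zooNames.count
    }
}

final class DataHelper {
    let store: InMemoryStore

    init(store: InMemoryStore) {
        self.store = store
    }

    // Just enough production code to get started: insert three zoos.
    // The names are placeholders, not the ones from the Zootastic project.
    func seedZoos() {
        let names = ["Zoo A", "Zoo B", "Zoo C"]
        for name in names {
            store.insertZoo(named: name)
        }
    }
}

let store = InMemoryStore()
DataHelper(store: store).seedZoos()
print(store.countZoos()) // prints "3"
```

Notice how little there is: one function, no error handling, no other entities. That is enough to suggest an obvious first test without committing to much untested code.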

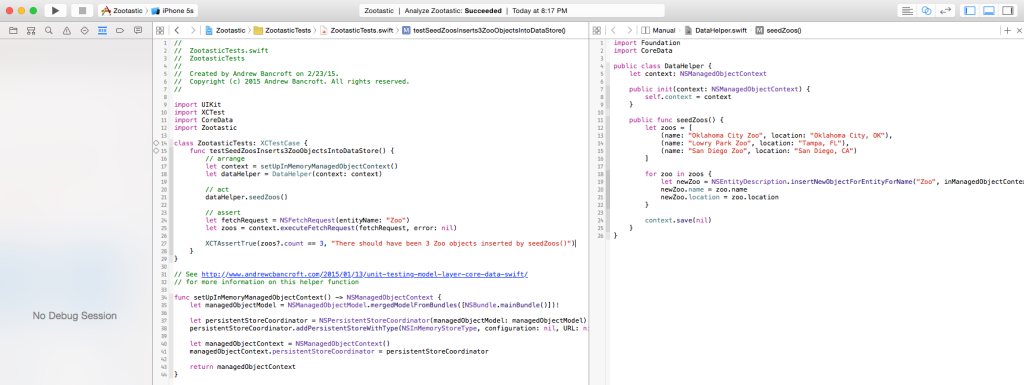

Write a test that will exercise the code

Having some real code with real class names and real function names usually helps me see what I need to do in terms of testing.

In the example I have going, I’d like my seedZoos() function to insert 3 Zoo objects into my Core Data data store.

At this point, it’s pretty easy for me to think of the name of my first test. How about testSeedZoosInserts3ZooObjectsIntoDataStore()?
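Here is a sketch of what that first test might look like. In Xcode it would be a method on an XCTestCase subclass ending in an XCTAssertEqual call against the Core Data context. To keep the sketch runnable as a plain Swift script, it assumes a hypothetical InMemoryStore in place of Core Data and a tiny hypothetical TestRun type in place of XCTest; the zoo names are placeholders.

```swift
// Hypothetical stand-in for a Core Data managed object context.
final class InMemoryStore {
    private(set) var zooNames: [String] = []
    func insertZoo(named name: String) { zooNames.append(name) }
    func countZoos() -> Int { zooNames.count }
}

final class DataHelper {
    let store: InMemoryStore
    init(store: InMemoryStore) { self.store = store }

    func seedZoos() {
        for name in ["Zoo A", "Zoo B", "Zoo C"] {
            store.insertZoo(named: name)
        }
    }
}

// Tiny hypothetical harness standing in for XCTest: a test passes unless
// one of its assertions fails (XCTest likewise reports such a test as passing).
final class TestRun {
    private(set) var failures: [String] = []
    var passed: Bool { failures.isEmpty }

    func assertEqual<T: Equatable>(_ actual: T, _ expected: T, _ message: String = "") {
        if actual != expected {
            failures.append("\(actual) is not equal to \(expected). \(message)")
        }
    }
}

// Runs one test function with a fresh TestRun and reports the result.
func run(_ name: String, _ test: (TestRun) -> Void) -> Bool {
    let t = TestRun()
    test(t)
    print("\(name): \(t.passed ? "PASSED" : "FAILED")")
    return t.passed
}

// Arrange, act, assert: seed a fresh store, then check that exactly three zoos exist.
func testSeedZoosInserts3ZooObjectsIntoDataStore(_ t: TestRun) {
    let store = InMemoryStore()
    let helper = DataHelper(store: store)
    helper.seedZoos()
    t.assertEqual(store.countZoos(), 3, "seedZoos() should insert 3 zoos")
}

let passed = run("testSeedZoosInserts3ZooObjectsIntoDataStore",
                 testSeedZoosInserts3ZooObjectsIntoDataStore)
// prints "testSeedZoosInserts3ZooObjectsIntoDataStore: PASSED"
```

Because seedZoos() is already implemented here, this test passes on its very first run, which is exactly the situation the next step guards against.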

Comment out the production code so that the test will fail

Running the tests right now would produce a passing test. “Great!” you say. But here’s my issue with simply running the test, seeing it pass, and moving on without ever having seen it fail:

There are many ways to produce passing tests without actually verifying the results of executing the app’s code.

- I could write a test with no assert. That’d be silly, but forgetting the assertion at the end would still produce a green test, and it’s easier to do than you think once you get rolling with these things. If you expect the first run to fail, a passing first run alerts you that something is off.

- I could write a test that asserts the wrong thing and produces a false positive. Again, expecting “fail” on the first run would alert me if I saw “pass” instead.

- Suppose I copied and pasted a test, intending to replace its implementation to test my new code, but got distracted between pasting it and running it for the first time. If I ran it, saw “pass,” and moved on, the test wouldn’t be doing its job: it would be testing something I had already tested, not the new lines of code I just produced!
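The first two pitfalls are easy to reproduce. In the following sketch, seedZoos() deliberately does nothing, yet both flawed tests report a pass. As before, InMemoryStore (in place of Core Data) and TestRun (in place of XCTest) are hypothetical stand-ins so the example runs as a plain Swift script.

```swift
// Hypothetical stand-in for a Core Data managed object context.
final class InMemoryStore {
    private(set) var zooNames: [String] = []
    func insertZoo(named name: String) { zooNames.append(name) }
    func countZoos() -> Int { zooNames.count }
}

// Production code that does nothing yet, so any honest test of it should fail.
final class DataHelper {
    let store: InMemoryStore
    init(store: InMemoryStore) { self.store = store }
    func seedZoos() {}
}

// Minimal hypothetical harness: a test passes unless an assertion fails.
final class TestRun {
    private(set) var failures = 0
    var passed: Bool { failures == 0 }
    func assertTrue(_ condition: Bool) {
        if !condition { failures += 1 }
    }
}

func run(_ test: (TestRun) -> Void) -> Bool {
    let t = TestRun()
    test(t)
    return t.passed
}

// Pitfall 1: the assertion was forgotten, so nothing can ever fail.
func testSeedZoosForgotTheAssert(_ t: TestRun) {
    let store = InMemoryStore()
    DataHelper(store: store).seedZoos()
}

// Pitfall 2: the assertion checks the wrong thing; a count is never negative.
func testSeedZoosAssertsTheWrongThing(_ t: TestRun) {
    let store = InMemoryStore()
    DataHelper(store: store).seedZoos()
    t.assertTrue(store.countZoos() >= 0)
}

print(run(testSeedZoosForgotTheAssert))      // prints "true"
print(run(testSeedZoosAssertsTheWrongThing)) // prints "true"
```

Both tests are green even though no zoo was inserted. Expecting red on the first run is what exposes them.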

The point is this: There are too many ways to write a test that doesn’t truly test your code. Suffice it to say, you should always make the test fail so that you know it’s wired up to the right production code. Thus, this crucial step: comment out the production code. It’ll ensure you get a failing test on the first run (if you’re truly testing the right thing).

Run the test and verify that it fails

With the production code I just wrote commented out, I run the test. My expectation at this point is that it will fail, because the seedZoos() function does not currently insert any Zoo objects into the data store.

If the test doesn’t fail, something is wrong. Check the basics: Did you include an assert at the end of the test? Are you exercising the right production code? Continue troubleshooting and re-running the test until it fails.
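Here is a sketch of this state: the body of seedZoos() is commented out and the test is run once, expecting a failure. InMemoryStore and TestRun are hypothetical stand-ins for Core Data and XCTest, and the failure message format is this sketch’s own, not XCTest’s.

```swift
// Hypothetical stand-in for a Core Data managed object context.
final class InMemoryStore {
    private(set) var zooNames: [String] = []
    func insertZoo(named name: String) { zooNames.append(name) }
    func countZoos() -> Int { zooNames.count }
}

final class DataHelper {
    let store: InMemoryStore
    init(store: InMemoryStore) { self.store = store }

    func seedZoos() {
        // Production code commented out so the first run of the test must fail.
        // for name in ["Zoo A", "Zoo B", "Zoo C"] {
        //     store.insertZoo(named: name)
        // }
    }
}

// Minimal hypothetical harness in place of XCTest: collects failure messages.
final class TestRun {
    private(set) var failures: [String] = []
    var passed: Bool { failures.isEmpty }
    func assertEqual<T: Equatable>(_ actual: T, _ expected: T) {
        if actual != expected {
            failures.append("expected \(expected), got \(actual)")
        }
    }
}

func testSeedZoosInserts3ZooObjectsIntoDataStore(_ t: TestRun) {
    let store = InMemoryStore()
    DataHelper(store: store).seedZoos()
    t.assertEqual(store.countZoos(), 3)
}

let firstRun = TestRun()
testSeedZoosInserts3ZooObjectsIntoDataStore(firstRun)
print(firstRun.passed ? "PASSED" : "FAILED: \(firstRun.failures)")
// prints: FAILED: ["expected 3, got 0"]
```

Uncommenting the loop is the only change needed for the same test to pass, which is what makes the eventual green result trustworthy.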

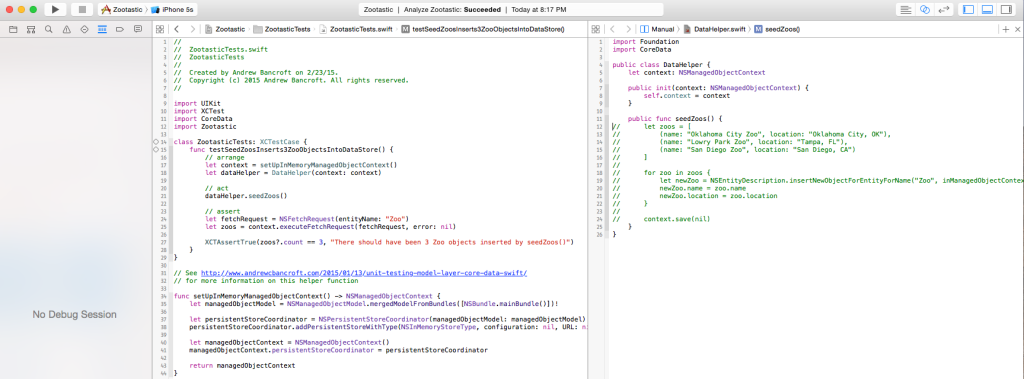

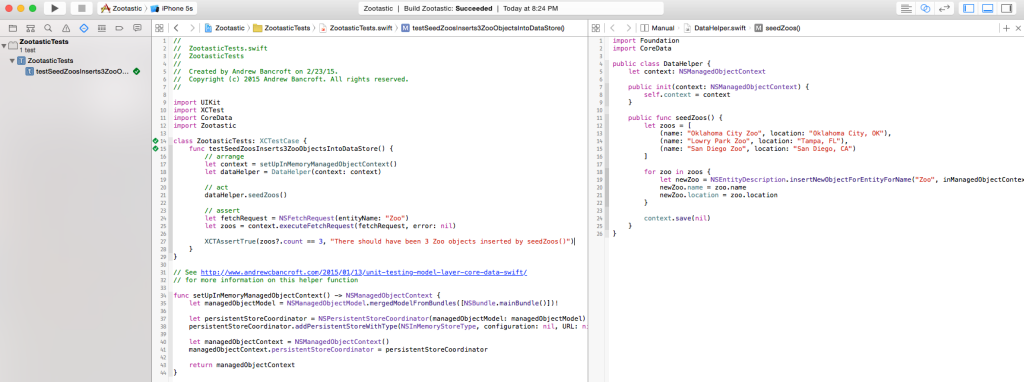

Uncomment the production code so that the test will pass

Once I’ve been able to make the test fail, I uncomment the production code.

The idea is that once the production code is “live” again, the currently failing test should pass, because the production code now performs the logic the test’s assertion requires. We know the test currently fails, so if it passes after we uncomment the production code, the only reason it could pass is that the production code is doing the right thing for that particular test’s assertion. Nothing else about our work environment changed, so nothing but the uncommented production code could have caused the test to pass.

Here’s a view of the IDE in the state right before I run the test again to watch it pass:

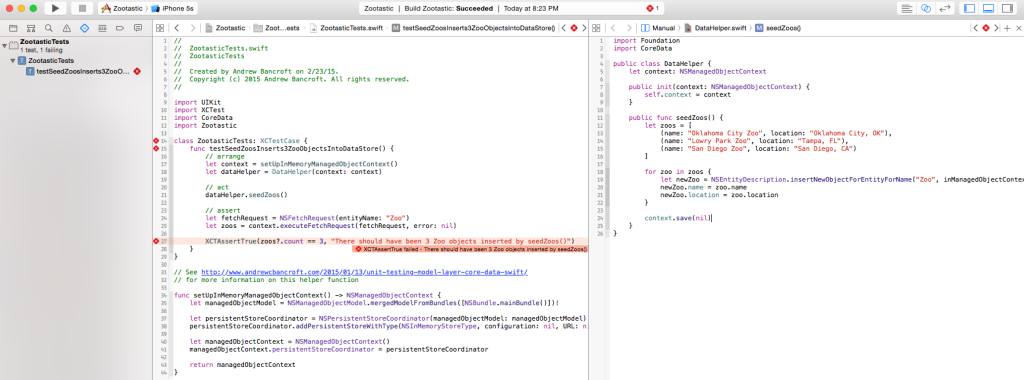

Run the test and verify that it passes

The last step in this (almost) TDD workflow is to run the test one more time. This time it should pass.

If the test doesn’t pass, then something is wrong. Check the basics: Does the test assert the right thing? Does the production code perform correct logic that would satisfy the test’s assertion? Continue troubleshooting and revise the necessary code until you have a passing test.

Rinse and repeat

You can perform this workflow as many times as you need. It’s a stepping stone, and the hope is that eventually you’ll be able to write the tests first. It takes a little practice, but in my experience this technique has been a gateway to true Test-Driven Development.